Cache Management

This page discusses using a distributed cache in Stardog to boost performance for a variety of use cases.

Overview

Stardog 6.2.0 introduced a distributed cache. A set of cached datasets can run alongside a Stardog server or cluster, where a dataset is either an entire local graph or a virtual graph. This feature gives users the following abilities:

Reduce Load on Upstream Database Servers

When using virtual graphs, the upstream server may be slow, overloaded, far away, or short on operational capacity. This feature addresses the problem by letting operators create a cached dataset that runs on its own node. Queries can then largely avoid the upstream database, and cache refreshes can be scheduled for times when its workload is lighter.

Read Scale-out for a Stardog Cluster

Cache nodes let operators add read capacity for slowly changing data without affecting write capacity. A Stardog cluster is a consistent database that replicates every write to every node, so adding consistent read nodes strains write capacity. When serving slowly changing data that does not need to be fully consistent, a cache node can be added to provide additional read capacity instead.

Partial Materialization of Slowly Changing Data

A cached dataset can be created for a virtual graph that maps to a portion of a database that is not updated frequently. That dataset is then used together with an uncached virtual graph mapped to the portions of the database that are updated more frequently. This hybrid approach balances performance against freshness.

Architecture

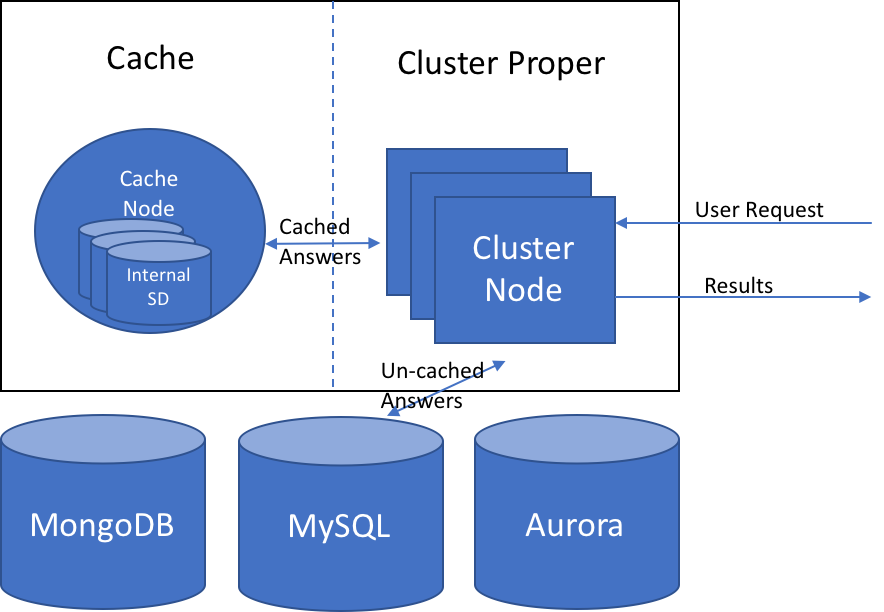

Running inside a Stardog server (either a cluster or a single node) is a component called the cache manager. The cache manager tracks which caches exist, where they are, and what they contain. The query planner consults the cache manager to determine whether it can use a cache in the plan.
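To make the cache manager's bookkeeping concrete, here is a minimal Python sketch. It is a hypothetical model, not Stardog's implementation or API: the names CacheManager, CacheEntry, add_target, create_cache, lookup, and plan_source are illustrative assumptions. It records which targets exist and where they are, which graph each cache holds, and lets a planner-like step fall back to the original source when no cache covers a graph.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CacheEntry:
    name: str      # cache name, e.g. "cache://cache1"
    graph: str     # cached graph, e.g. "virtual://dataset"
    database: str  # database the graph belongs to, e.g. "movies"
    target: str    # name of the cache target that stores the data


@dataclass
class CacheManager:
    """Hypothetical model of what a cache manager tracks (not Stardog's API)."""
    targets: dict[str, str] = field(default_factory=dict)      # target name -> address
    caches: dict[str, CacheEntry] = field(default_factory=dict)  # cache name -> entry

    def add_target(self, name: str, address: str) -> None:
        # Where the cache target lives.
        self.targets[name] = address

    def create_cache(self, name: str, graph: str, database: str, target: str) -> None:
        # A cache can only be placed on a target the manager already knows about.
        if target not in self.targets:
            raise KeyError(f"unknown cache target: {target}")
        self.caches[name] = CacheEntry(name, graph, database, target)

    def lookup(self, database: str, graph: str) -> Optional[CacheEntry]:
        # What is in each cache: return one that holds `graph` for `database`, if any.
        for entry in self.caches.values():
            if entry.database == database and entry.graph == graph:
                return entry
        return None


def plan_source(manager: CacheManager, database: str, graph: str) -> str:
    """Hypothetical planner step: read from a cache when one exists, else the source."""
    entry = manager.lookup(database, graph)
    if entry is None:
        return graph
    return f"{entry.name}@{manager.targets[entry.target]}"
```

For example, after `m.add_target("ctarget", "10.0.0.5:5820")` and `m.create_cache("cache://cache1", "virtual://dataset", "movies", "ctarget")`, a lookup for virtual://dataset in movies resolves to the cache, while any other graph resolves to its original source.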

Cache Targets

Cache targets are separate processes that internally resemble a single-node Stardog server. Each contains a single database into which cached information is loaded and updated, and a single cache target can hold many caches. How to distribute caches across targets is up to each operator, based on their own resource and locality needs.
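Because balancing is left to operators, one possible strategy (an assumption, not a Stardog feature) is greedy placement: take caches largest first and put each on the target with the most remaining capacity. In this sketch, place_caches is a hypothetical helper, and cache sizes and target capacities are operator-supplied estimates in any consistent unit.

```python
def place_caches(cache_sizes: dict[str, int], target_capacity: dict[str, int]) -> dict[str, str]:
    """Hypothetical greedy placement: largest cache first, onto the emptiest target."""
    if not target_capacity:
        raise ValueError("no cache targets available")
    remaining = dict(target_capacity)  # copy so the caller's dict is not modified
    placement: dict[str, str] = {}
    # Sort caches by estimated size, largest first.
    for cache, size in sorted(cache_sizes.items(), key=lambda kv: kv[1], reverse=True):
        # Pick the target with the most remaining capacity (first one wins on ties).
        target = max(remaining, key=remaining.get)
        if remaining[target] < size:
            raise ValueError(f"no target has room for {cache} (size {size})")
        placement[cache] = target
        remaining[target] -= size
    return placement
```

Locality constraints are not modeled here; an operator who needs certain caches on particular hosts would pin those first and place the rest around them.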

The following diagram shows how the distributed cache can be used to answer queries, where some of the data is cached, and some remains in its original source.

Setting Up A Distributed Cache

To set up Stardog with a distributed cache, first start a Stardog server as described in Administering Stardog.

A cache target should run the same Stardog version as the cluster (or single server) it serves.

Each cache target is a separate Stardog server. A server is configured as a cache target with the following options in its stardog.properties file:

```properties
# Flag to run this server as a cache target
cache.target.enabled=true

# If using the cache target with a cluster, we need to tell it where
# the cluster's ZooKeeper installation is running
pack.zookeeper.address=196.69.68.1:2180,196.69.68.2:2180,196.69.68.3:2180

# Flag to automatically register this cache target on startup. This is
# only applicable when using a cluster
cache.target.autoregister=false

# The name to use for this cache target on auto register. This defaults
# to the hostname
cache.target.name=mycache
```
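When cache targets are provisioned by a script, the same options can be rendered programmatically. This Python sketch uses hypothetical helper names (cache_target_properties, parse_properties) and emits only the keys shown above. It writes values without quotes, assuming stardog.properties is read as a standard Java properties file, where quotes would become part of the value. A minimal reader is included to check the result; it ignores comments and blank lines but does not handle escapes or line continuations.

```python
from typing import Optional, Sequence


def cache_target_properties(zookeeper_hosts: Optional[Sequence[str]] = None,
                            autoregister: bool = False,
                            name: Optional[str] = None) -> str:
    """Render the cache-target options as stardog.properties text (hypothetical helper)."""
    lines = ["cache.target.enabled=true"]
    if zookeeper_hosts:
        # Cluster-only settings: where ZooKeeper runs, and whether to self-register.
        lines.append("pack.zookeeper.address=" + ",".join(zookeeper_hosts))
        lines.append("cache.target.autoregister=" + str(autoregister).lower())
        if name:
            # Name used on auto register; Stardog defaults it to the hostname.
            lines.append("cache.target.name=" + name)
    return "\n".join(lines) + "\n"


def parse_properties(text: str) -> dict[str, str]:
    """Minimal key=value reader: skips blanks and # comments, no escapes/continuations."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props
```

Writing the output of `cache_target_properties([...], autoregister=True, name="mycache")` into the cache target's stardog.properties would produce a file equivalent to the example above with auto registration turned on.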

Once both Stardog and the cache target are running, we need to register the cache target with Stardog. This is done with the cache target add command:

```shell
$ stardog-admin --server http://<cluster IP>:5820 cache target add <target name> <target hostname>:5820 admin <admin pw>
```
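When several cache targets need registering, the command can be scripted. The sketch below builds the argument list of the cache target add command for each target and either prints it (a dry run, with the password masked) or runs it with subprocess. The function names and the dry-run behavior are assumptions for illustration; only the stardog-admin arguments come from the command documented here.

```python
import shlex
import subprocess


def target_add_command(server_url: str, target_name: str, target_address: str,
                       admin_user: str, admin_password: str) -> list[str]:
    """argv for: stardog-admin --server URL cache target add NAME HOST:PORT USER PW"""
    return [
        "stardog-admin", "--server", server_url,
        "cache", "target", "add",
        target_name, target_address,
        admin_user, admin_password,
    ]


def register_targets(server_url: str, targets: dict[str, str], admin_user: str,
                     admin_password: str, dry_run: bool = True) -> list[list[str]]:
    """Register each target (name -> "host:port"); print instead of running when dry_run."""
    commands = [target_add_command(server_url, name, address, admin_user, admin_password)
                for name, address in targets.items()]
    for argv in commands:
        if dry_run:
            # Mask the trailing password argument before echoing the command.
            print(shlex.join(argv[:-1] + ["****"]))
        else:
            subprocess.run(argv, check=True)  # raises CalledProcessError on failure
    return commands
```

Running with `dry_run=True` first makes it easy to review the generated commands before anything touches the server.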

Once Stardog knows of the existing cache target, datasets can be cached on it. To cache a graph, run a cache create command similar to the following:

```shell
$ stardog-admin --server http://<cluster IP>:5820 cache create cache://cache1 --graph virtual://dataset --target ctarget --database movies
```

This creates a cache named cache://cache1 that holds the contents of the virtual graph virtual://dataset, which is associated with the database movies, and stores that cache on the target ctarget.
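The same scripting approach works for creating caches, which is convenient when several slowly changing virtual graphs are cached side by side. This hypothetical helper reproduces the arguments of the cache create command documented here; the mapping format (cache name to a graph and target pair) and the dry-run default are assumptions.

```python
import shlex
import subprocess
from typing import Mapping, Tuple


def cache_create_command(server_url: str, cache_name: str, graph: str,
                         target: str, database: str) -> list[str]:
    """argv for: stardog-admin --server URL cache create NAME --graph G --target T --database DB"""
    return [
        "stardog-admin", "--server", server_url,
        "cache", "create", cache_name,
        "--graph", graph,
        "--target", target,
        "--database", database,
    ]


def create_caches(server_url: str, database: str,
                  caches: Mapping[str, Tuple[str, str]],
                  dry_run: bool = True) -> list[list[str]]:
    """Create one cache per entry (cache name -> (graph, target)); print when dry_run."""
    commands = [cache_create_command(server_url, name, graph, target, database)
                for name, (graph, target) in caches.items()]
    for argv in commands:
        if dry_run:
            print(shlex.join(argv))
        else:
            subprocess.run(argv, check=True)  # stop at the first failing command
    return commands
```

For instance, `create_caches("http://<cluster IP>:5820", "movies", {"cache://cache1": ("virtual://dataset", "ctarget")})` prints the same command as the example above.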